Jie An [安 捷]

Hi! I am an Applied Scientist at Amazon AGI, where I work on image and video generation models as well as omni understanding and generation models. Previously, I was a Research Scientist at Meta Reality Labs, where I worked on 3D generation and world modeling. I received my Ph.D. in Computer Science from the University of Rochester, where I was advised by Prof. Jiebo Luo and received the ACM SIGMM Outstanding Ph.D. Thesis Award.

Earlier, I earned my bachelor's and master's (with honor) degrees in Applied Mathematics from Peking University, advised by Prof. Jinwen Ma.

I was a research intern at Apple (Seattle, 2024–2025), working with Prof. Alexander Schwing. Before that, I interned at Microsoft Cloud & AI (Redmond, 2023–2024, now part of Microsoft AI) and Meta FAIR (New York City, 2022), hosted by Dr. Zhengyuan Yang and Prof. Harry Yang.

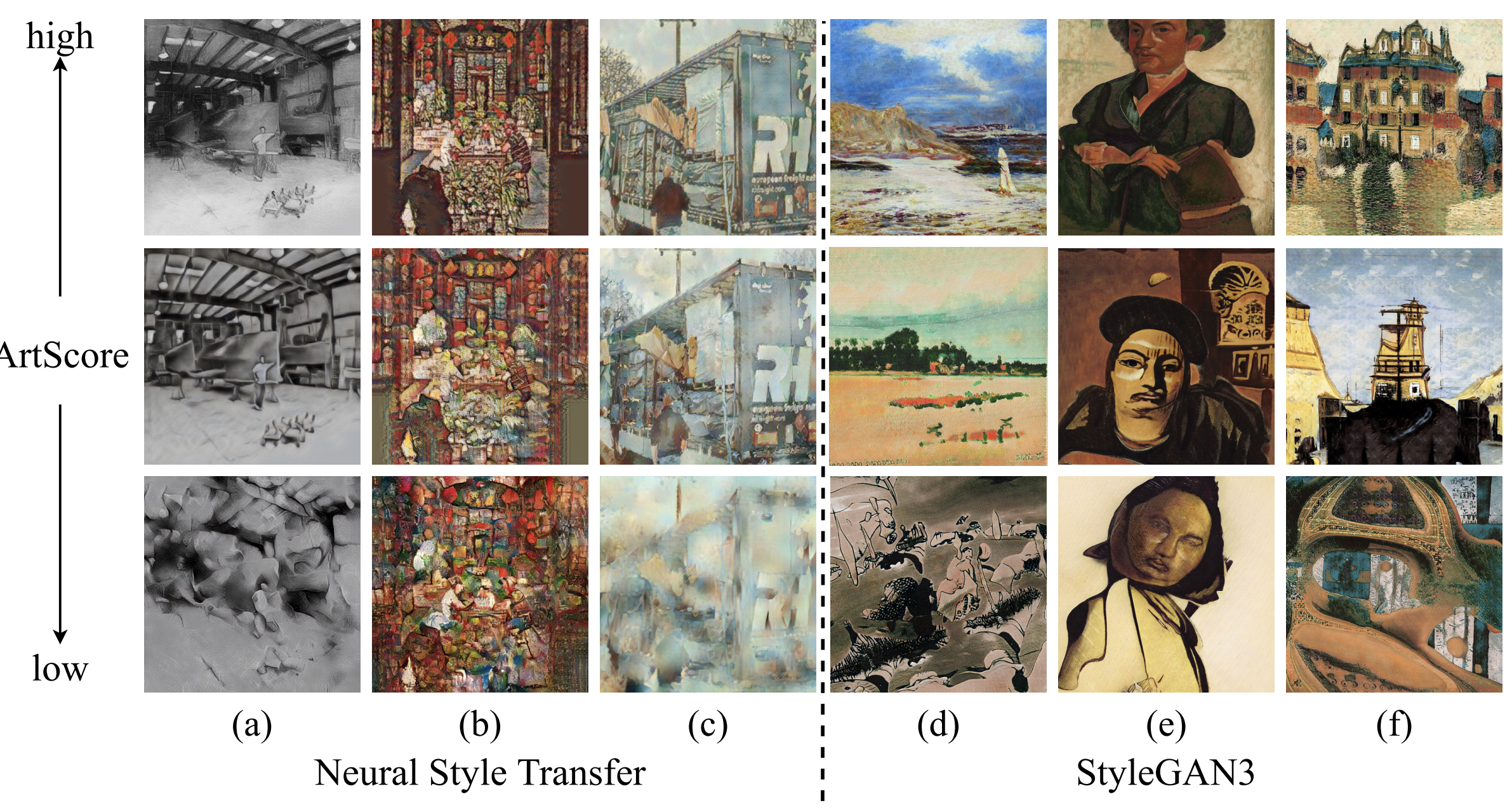

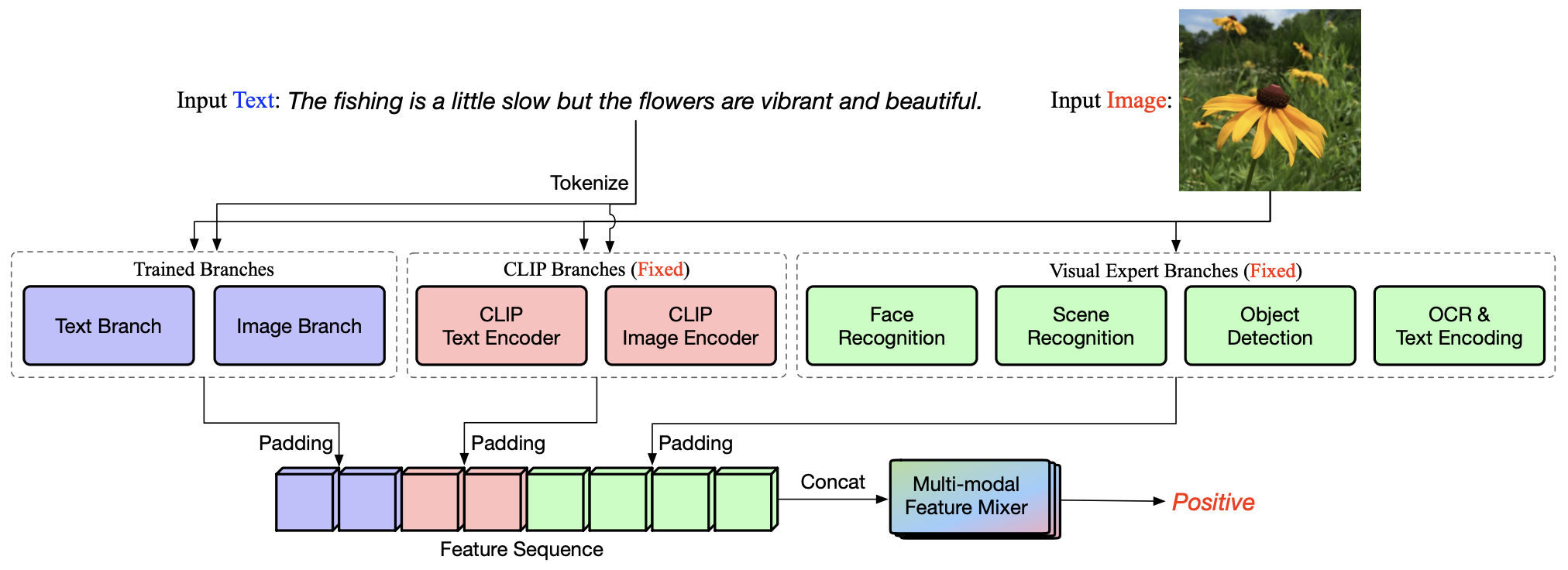

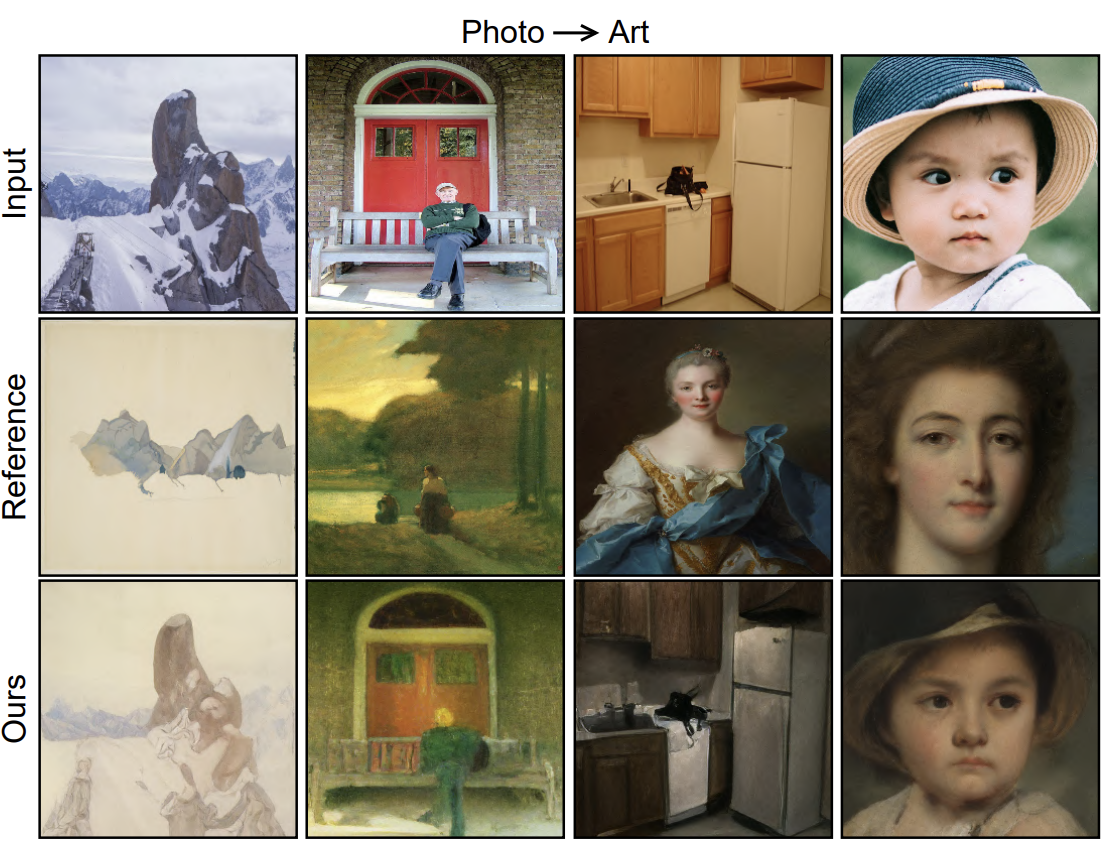

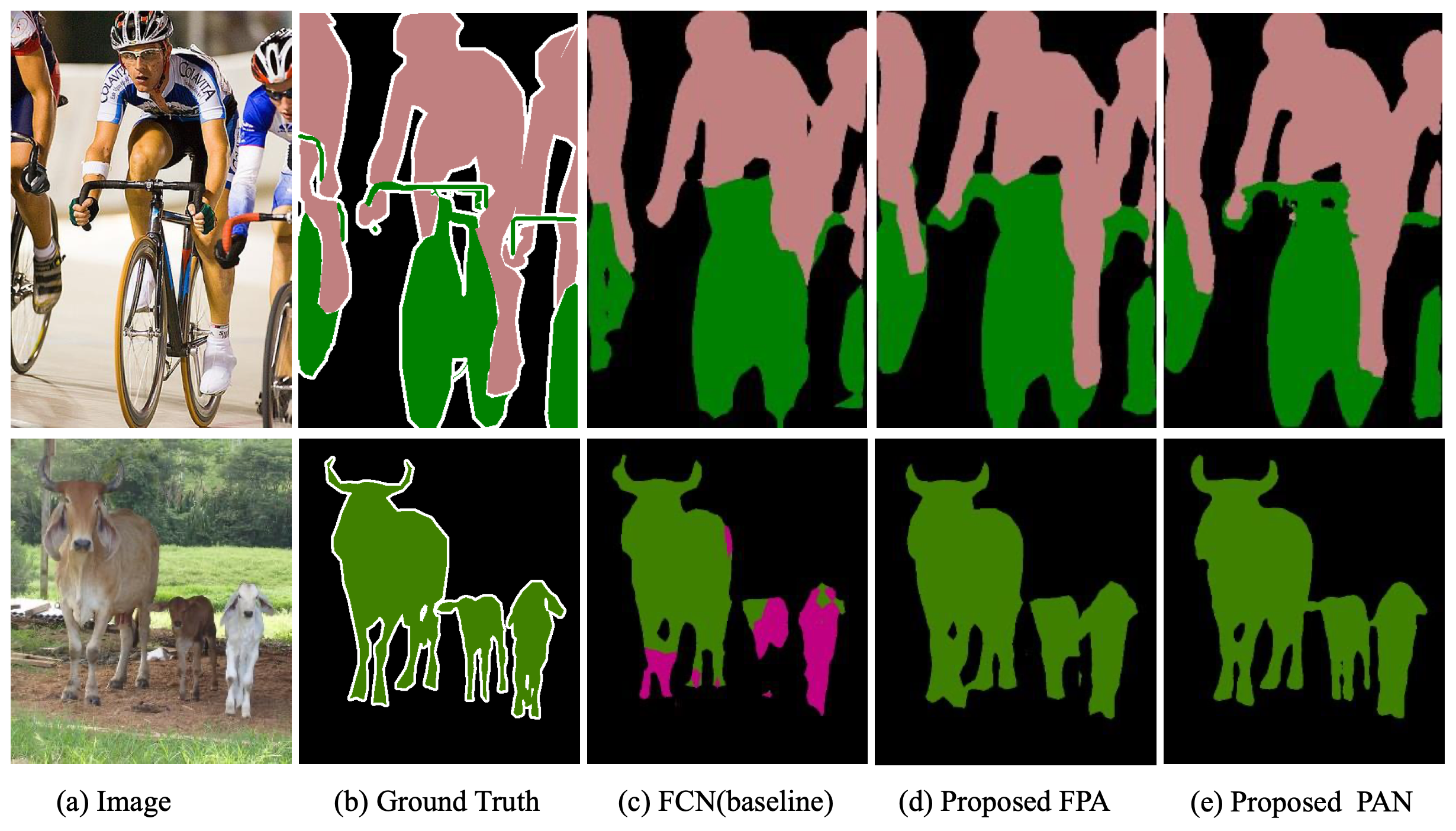

My research primarily focuses on visual content generation. I am particularly interested in

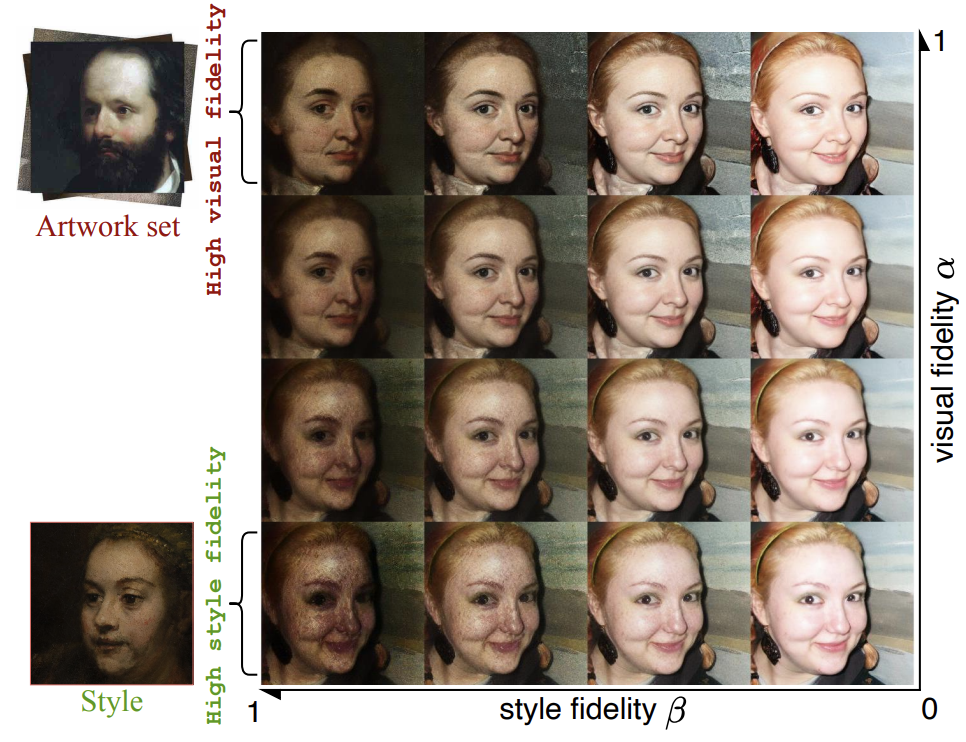

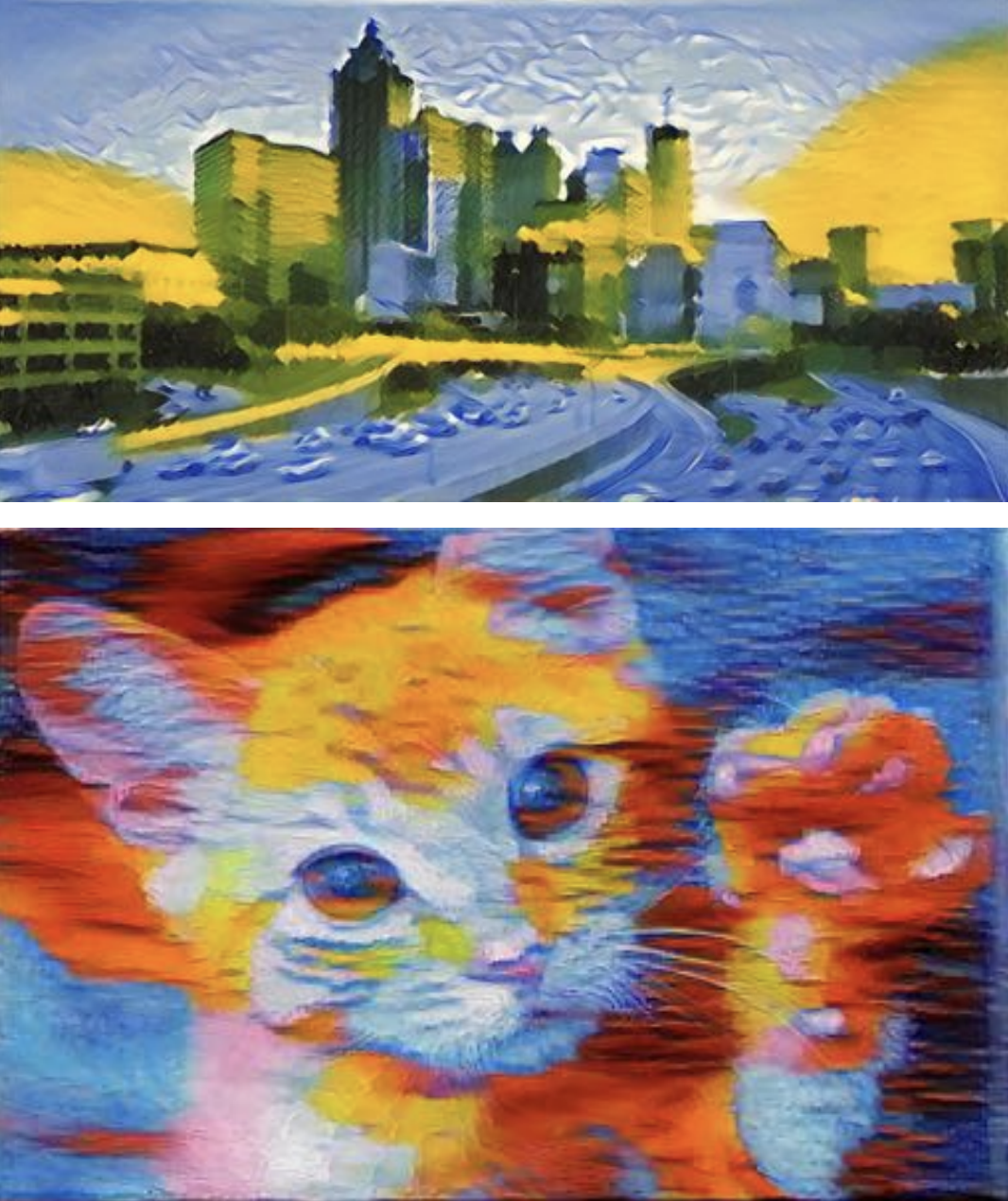

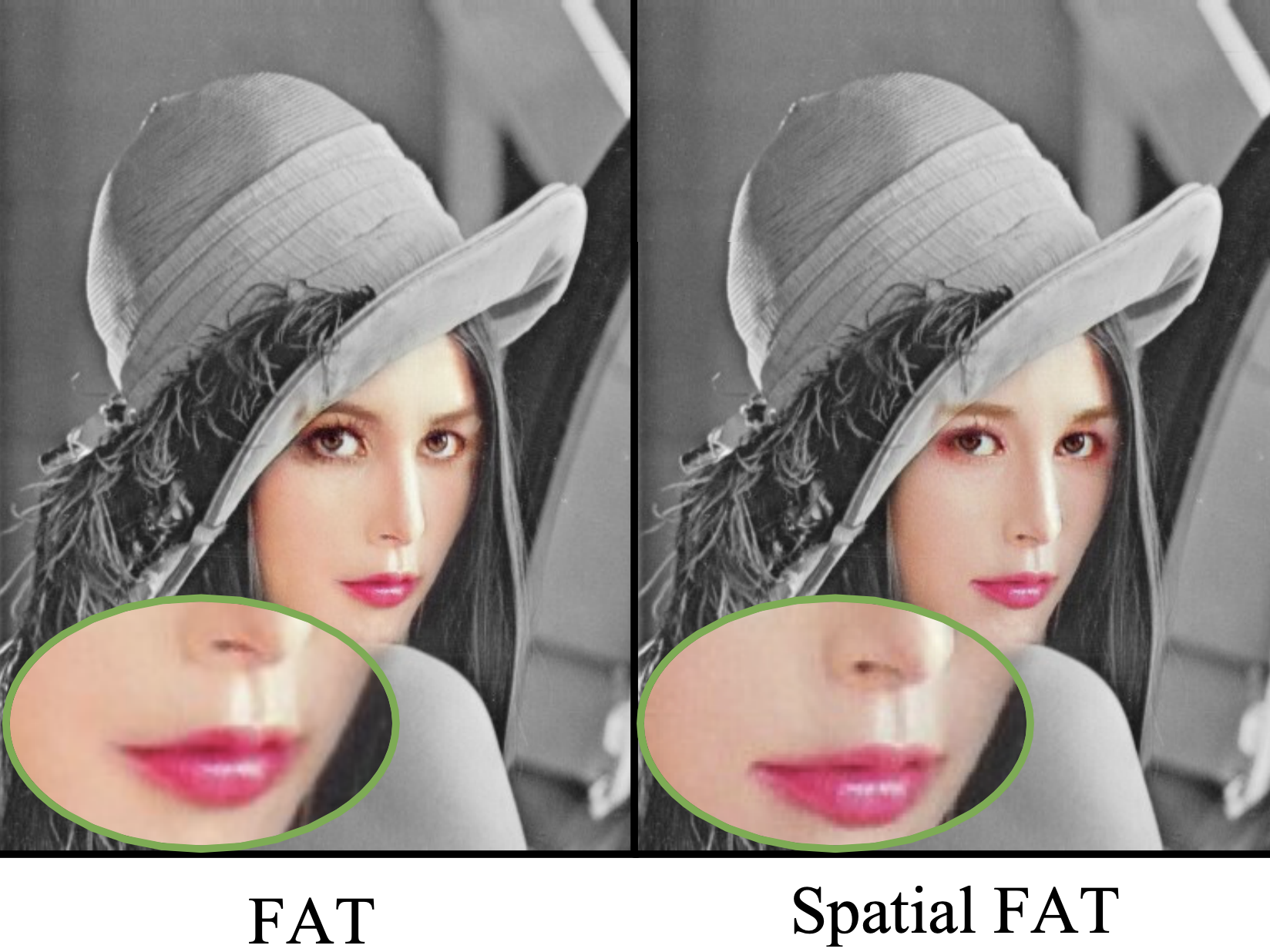

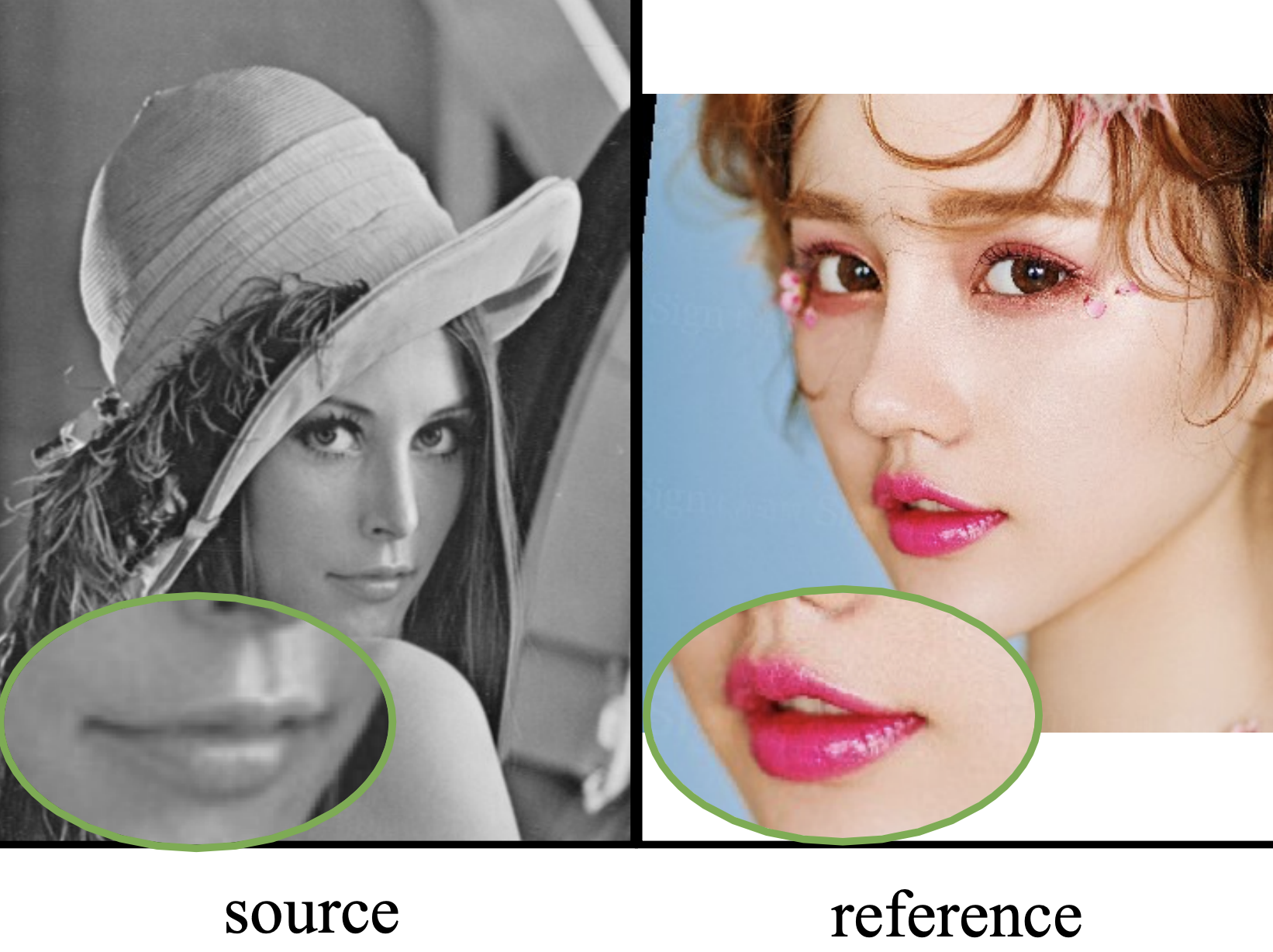

foundational generative models, text-to-video generation, physics AI/world modeling, and artistic

generation/style transfer. My research is driven by a long-term vision: from developing a

fundamental understanding of generative models, to building increasingly capable foundation models,

and ultimately advancing toward visual artificial general intelligence (VisualAGI).

Contact: pkuanjie [at] gmail [dot] com

Email / Google Scholar / GitHub / LinkedIn / Name Pronunciation